Visualizations in 3D

The Ouster Python SDK provides two visualization utilities for user convenience. These are introduced briefly below.

Visualization with Ouster’s simple-viz

Ouster’s OpenGL-based visualizer allows for easy visualization from pcaps and sensors on all platforms the Ouster SDK supports.

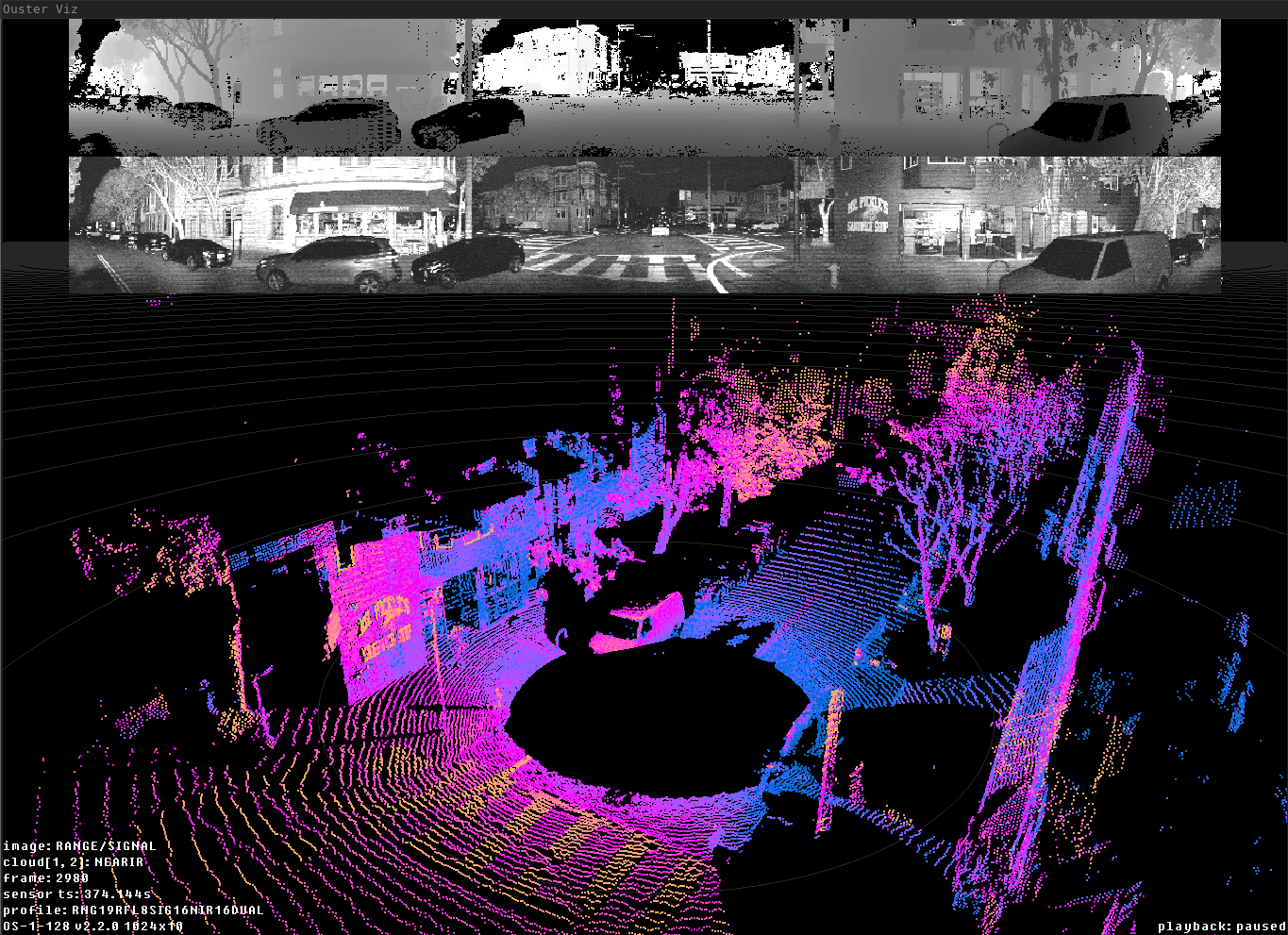

The default Ouster simple-viz view shows two 2D range images at the top, each of which can be cycled through the available fields, and a 3D point cloud at the bottom. For dual-return sensors, both returns are displayed by default.

Ouster simple-viz visualization of OS1 128 Rev 6 sample data

The visualizer can be controlled with mouse and keyboard:

- Keyboard controls:

| Key | What it does |
|---|---|
| o | Toggle on-screen display |
| p / P | Increase/decrease point size |
| m | Cycle point cloud coloring mode |
| b | Cycle top 2D image |
| n | Cycle bottom 2D image |
| R | Reset camera |
| e / E | Increase/decrease size of displayed 2D images |
| ' / " | Increase/decrease spacing in range markers |
| w | Camera pitch up |
| s | Camera pitch down |
| a | Camera yaw left |
| d | Camera yaw right |
| 1 | Toggle first return point cloud visibility |
| 2 | Toggle second return point cloud visibility |
| 0 | Toggle orthographic camera |
| = / - | Dolly in/out |
| (space) | Toggle pause |
| . / , | Step one frame forward/back |
| ctrl + . / , | Step 10 frames forward/back |
| > / < | Increase/decrease playback rate (during replay) |
| shift + mouse drag | Translate the camera |
| shift-z | Save a screenshot of the current view |
| shift-x | Toggle continuous saving of screenshots |

To run the visualizer with a sensor:

$ simple-viz --sensor $SENSOR_HOSTNAME

This auto-configures the sensor's UDP destination while leaving the lidar port as previously set on the sensor.

To run the visualizer with a pcap:

$ simple-viz --pcap $SAMPLE_DATA_PCAP_PATH --meta $SAMPLE_DATA_JSON_PATH

Visualization with Ouster’s viz.PointViz

Please refer to PointViz Tutorial & API Usage for details on extending and customizing viz.PointViz.

Visualization with Open3d

The Open3d library contains Python bindings for a variety of tools for working with point cloud data. Loading data into Open3d is just a matter of reshaping the numpy representation of a point cloud, as demonstrated in the examples.pcap.pcap_3d_one_scan() example:

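import open3d as o3d  # pip install open3d
from ouster import client

# compute point cloud using client.SensorInfo (metadata) and client.LidarScan (scan)
xyz = client.XYZLut(metadata)(scan)

# create point cloud and coordinate axes geometries
cloud = o3d.geometry.PointCloud(
    o3d.utility.Vector3dVector(xyz.reshape((-1, 3))))  # type: ignore
axes = o3d.geometry.TriangleMesh.create_coordinate_frame(
    1.0)  # type: ignore

# open an Open3d window showing the point cloud and the axes
o3d.visualization.draw_geometries([cloud, axes])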
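The reshape is the only step that changes the data layout: XYZLut returns an array of shape (H, W, 3), while Open3d's Vector3dVector expects one row per point. The sketch below uses random numpy data as a stand-in for a real scan (the 128 x 1024 size is an assumption, roughly a 128-beam sensor in 1024x10 mode) to show what reshape((-1, 3)) produces and how each row maps back to a pixel.

```python
import numpy as np

# Synthetic stand-in for client.XYZLut(metadata)(scan): shape (H, W, 3).
# H=128 and W=1024 are assumed sizes, chosen only for illustration.
H, W = 128, 1024
rng = np.random.default_rng(0)
xyz = rng.uniform(-10.0, 10.0, size=(H, W, 3))

# Flatten the image grid into an (N, 3) array with one row per pixel,
# the layout o3d.utility.Vector3dVector accepts.
# For a C-contiguous array, reshape returns a view, so no data is copied.
points = xyz.reshape((-1, 3))

# Row-major order: point k comes from pixel (k // W, k % W).
k = 5 * W + 7
print(points.shape)  # (131072, 3)
print(np.array_equal(points[k], xyz[k // W, k % W]))  # True
```

Because the order is row-major, any per-pixel field flattened the same way (for example a color array) stays aligned with these points.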

The examples.open3d module contains a more fully-featured visualizer built using the Open3d library, which can be used to replay pcap files or visualize a running sensor. The bulk of the visualizer is implemented in the examples.open3d.viewer_3d() function.

Note

You’ll have to install the Open3d package from PyPI to run this example. Note that as of version 0.13.0, binaries are not yet provided for Python 3.9 or ARM systems.

As an example, you can view frame 84 from the sample data by running the following command:

On Linux or macOS:

$ python3 -m ouster.sdk.examples.open3d \
    --pcap $SAMPLE_DATA_PCAP_PATH --meta $SAMPLE_DATA_JSON_PATH --start 84 --pause

On Windows (PowerShell, where the line-continuation character is a backtick):

PS > py -3 -m ouster.sdk.examples.open3d `
    --pcap $SAMPLE_DATA_PCAP_PATH --meta $SAMPLE_DATA_JSON_PATH --start 84 --pause

You may also want to try the --sensor option to display the output of a running sensor. Use the -h flag to see a full list of command-line options and flags.

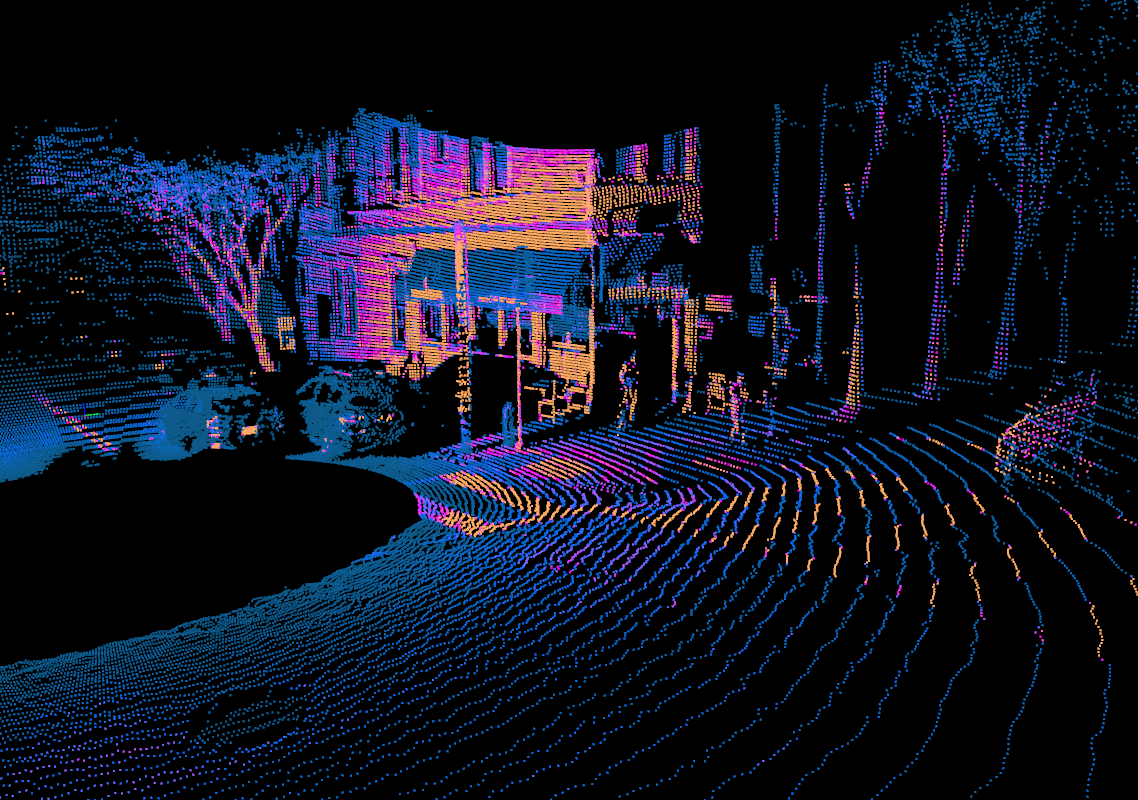

Running the example above should open a window displaying a scene from a city intersection, reproduced below:

Open3D visualization of OS1 sample data (frame 84). Points colored by SIGNAL field.

You should be able to click and drag the mouse to look around. You can zoom in and out using the mouse wheel, and hold control or shift while dragging to pan and roll, respectively.

Hitting the spacebar will start playing back the rest of the pcap in real time. Note that reasonable performance for realtime playback requires relatively fast hardware, since Open3d runs all rendering and processing in a single thread.

All of the visualizer controls are listed in the table below:

| Key | What it does |
|---|---|
| Mouse wheel | Zoom in and out |
| Left click + drag | Tilt and rotate the camera |
| Ctrl + left click + drag | Pan the camera laterally |
| Shift + left click + drag | Roll the camera |
| "+" / "-" | Increase or decrease point sizes |
| Spacebar | Pause or resume playback |
| "M" | Cycle through channel fields used for visualization |
| Right arrow key | When reading a pcap, jump 10 frames forward |
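The "M" key switches which channel field drives the point colors. As a hedged illustration of one way such coloring could work (not the viewer's actual code), the sketch below normalizes a field into grayscale colors in [0, 1] and cycles through field names. The helper name field_to_colors, the 99th-percentile scaling, and the tiny synthetic fields are all assumptions.

```python
import itertools
import numpy as np

def field_to_colors(field: np.ndarray, pct: float = 99.0) -> np.ndarray:
    """Map an (H, W) channel field to (H*W, 3) grayscale colors in [0, 1].

    Hypothetical helper: values are divided by the given percentile and
    clipped, so a few very bright returns do not wash out the rest.
    """
    values = field.reshape(-1).astype(np.float64)
    hi = np.percentile(values, pct)
    scale = hi if hi > 0 else 1.0  # avoid dividing by zero on an empty/zero field
    gray = np.clip(values / scale, 0.0, 1.0)
    # Replicate the gray level into the R, G and B channels.
    return np.repeat(gray[:, None], 3, axis=1)

# Toy fields standing in for real scan data (names and values are assumed).
fields = {
    "RANGE": np.array([[0, 500], [1000, 2000]], dtype=np.uint32),
    "SIGNAL": np.array([[10, 20], [30, 40]], dtype=np.uint16),
}

# Cycling through field names, similar in spirit to pressing "M".
order = itertools.cycle(fields)
first = next(order)
colors = field_to_colors(fields[first])
print(first, colors.shape)  # RANGE (4, 3)
```

If you adapt this, the result could be attached to an Open3d point cloud with cloud.colors = o3d.utility.Vector3dVector(colors); it stays aligned with the points as long as both are flattened in the same row-major order.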

Visualization with Matplotlib

This section assumes you have already defined source using either a pcap file or UDP data streamed directly from a sensor; please refer to Developer’s Quick Start with the Ouster Python SDK for an introduction.

Note

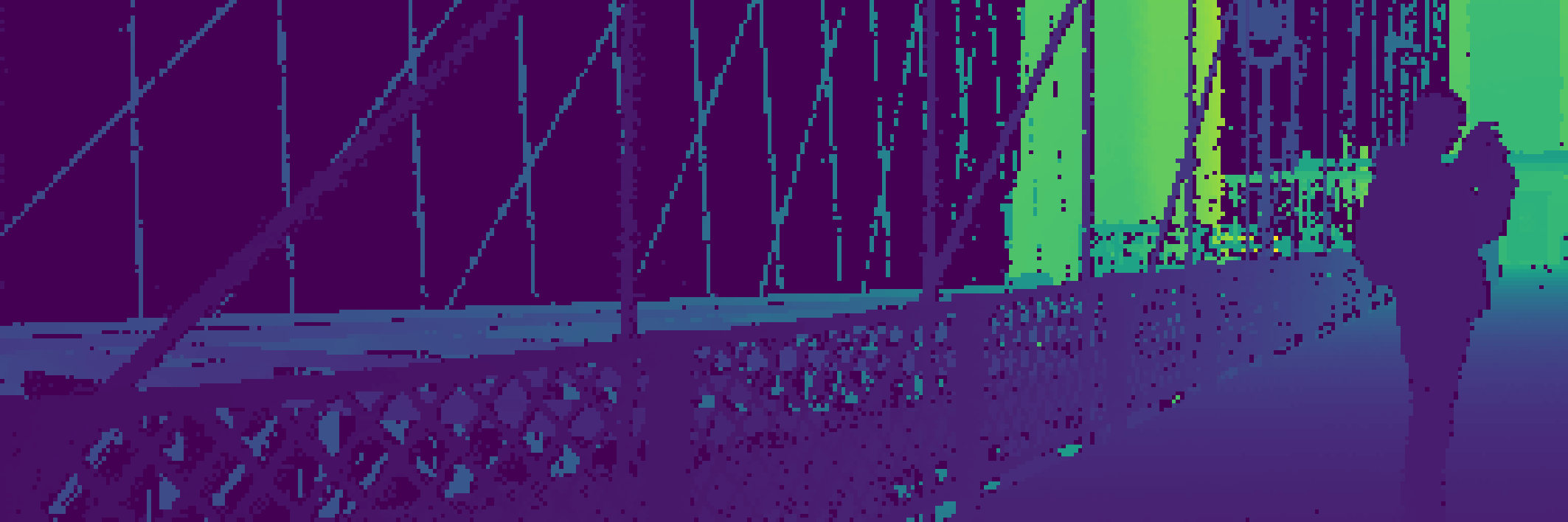

The pictures below were rendered using the OS2 128 Rev 05 Bridge sample data.

Let’s read from source until we get to the 50th frame of data:

from contextlib import closing

from more_itertools import nth

with closing(client.Scans(source)) as scans:
    scan = nth(scans, 50)
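more_itertools.nth simply skips ahead in an iterator. A standard-library sketch with the same behavior is shown below. Note that nth counts from zero, so nth(scans, 50) consumes 50 earlier scans and returns the one at index 50. The generator of frame labels is a stand-in for client.Scans(source).

```python
from itertools import islice

def nth(iterable, n, default=None):
    """Return the item at zero-based index n, or default if the iterable is shorter.

    Standard-library equivalent of more_itertools.nth.
    """
    return next(islice(iterable, n, None), default)

# Stand-in for client.Scans(source): a generator of frame labels.
frames = (f"frame-{i}" for i in range(100))
print(nth(frames, 50))  # frame-50
```

Since the items before index n are consumed and discarded, calling nth again on the same iterator continues from where the previous call stopped.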

Note

If you’re using a sensor and it takes a few seconds, don’t be alarmed! It has to get to the 50th frame of data, which would be 5.0 seconds for a sensor running in 1024x10 mode.
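The wait in the note is just the frame count divided by the frame rate. The hypothetical helper below spells out that arithmetic, assuming the mode string follows Ouster's "<columns>x<frequency>" naming such as 1024x10. It ignores startup and network delays, so treat the result as a lower bound.

```python
def seconds_to_frame(n_frames: int, lidar_mode: str) -> float:
    """Estimate how long a live sensor takes to produce n_frames scans.

    Hypothetical helper: the frame rate is taken from the number after
    the "x" in the mode string (e.g. 10 Hz for "1024x10").
    """
    _columns, rate_hz = (int(part) for part in lidar_mode.split("x"))
    return n_frames / rate_hz

print(seconds_to_frame(50, "1024x10"))  # 5.0
print(seconds_to_frame(50, "512x20"))   # 2.5
```

The column count does not affect the wait; only the rotation frequency does.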

We can extract the range measurements from the frame of data stored in the LidarScan datatype and plot a range image where each column corresponds to a single azimuth angle:

range_field = scan.field(client.ChanField.RANGE)

range_img = client.destagger(info, range_field)
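In the raw (staggered) data, the pixels in one column do not all share the same azimuth, because each beam has its own azimuth offset. The sketch below shows what destaggering amounts to, under the assumption that each row is circularly shifted by a per-row pixel offset taken from the sensor metadata. This is our reading of client.destagger, not its actual source. The function name destagger_sketch and the offsets used here are hypothetical.

```python
import numpy as np

def destagger_sketch(field: np.ndarray, pixel_shift_by_row, inverse: bool = False) -> np.ndarray:
    """Circularly shift each row of an (H, W) field so columns line up by azimuth.

    Sketch only: assumes row u is rolled right by pixel_shift_by_row[u]
    (left when inverse=True, which undoes the shift).
    """
    sign = -1 if inverse else 1
    out = np.empty_like(field)
    for u, shift in enumerate(pixel_shift_by_row):
        out[u] = np.roll(field[u], sign * shift)
    return out

staggered = np.array([[1, 2, 3, 4],
                      [5, 6, 7, 8]])
shifts = [0, 1]  # hypothetical per-row offsets; real ones come from the sensor metadata
img = destagger_sketch(staggered, shifts)
print(img)
# [[1 2 3 4]
#  [8 5 6 7]]
```

Because the shift is circular, applying it with inverse=True returns the original staggered layout, which is useful when writing processed images back into scan order.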

We can plot the results using standard Python tools that work with numpy datatypes. Here, we extract a column segment of the range data and display the result:

import matplotlib.pyplot as plt

plt.imshow(range_img[:, 640:1024], resample=False)

plt.axis('off')

plt.show()

Note

If running plt.show() gives you an error about your Matplotlib backend, you will need a GUI backend such as TkAgg or Qt5Agg in order to visualize your data with Matplotlib.
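The slice 640:1024 keeps 384 columns of the range image. Because each column of the destaggered image corresponds to one azimuth angle, the share of the horizontal field of view displayed can be estimated as below. The helper is hypothetical and assumes the columns are evenly spaced over a full 360 degrees. The image width is also an assumption, since the sample data's lidar mode is not stated here, so two widths are shown.

```python
def slice_fov_degrees(start: int, stop: int, columns: int) -> float:
    """Horizontal field of view covered by image columns [start, stop).

    Hypothetical helper: assumes `columns` evenly spaced azimuth columns
    spanning a full 360 degrees.
    """
    if not 0 <= start <= stop <= columns:
        raise ValueError("slice must lie within the image width")
    return (stop - start) * 360.0 / columns

print(slice_fov_degrees(640, 1024, 1024))  # 135.0
print(slice_fov_degrees(640, 1024, 2048))  # 67.5
```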

Range image of OS2 bridge data. Data taken at Brooklyn Bridge, NYC.

In addition to viewing the data in 2D, we can also plot the results in 3D by projecting the range measurements into Cartesian coordinates. To do this, we first create a lookup table, then use it to produce X, Y, Z coordinates from our scan data with shape (H x W x 3):

xyzlut = client.XYZLut(info)

xyz = xyzlut(scan)
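To make the idea of a lookup table concrete, here is a simplified sketch. The unit direction vector for every pixel is computed once, and each scan is then converted by scaling those vectors by the measured range. The helpers make_lut and apply_lut, the toy beam elevations, the evenly spaced azimuths, and RANGE values in millimeters are assumptions. The SDK's XYZLut also accounts for per-beam azimuth offsets, the beam origin offset, and the sensor's own axis conventions, none of which are modeled here.

```python
import numpy as np

def make_lut(elevations_deg, n_columns: int) -> np.ndarray:
    """Precompute a unit direction vector for every (row, column) pixel.

    Hypothetical simplified LUT: one elevation angle per row and azimuths
    evenly spaced over 360 degrees across the columns.
    """
    el = np.radians(np.asarray(elevations_deg, dtype=float))[:, None]   # (H, 1)
    az = np.radians(np.arange(n_columns) * 360.0 / n_columns)[None, :]  # (1, W)
    return np.stack([np.cos(el) * np.cos(az),
                     np.cos(el) * np.sin(az),
                     np.sin(el) * np.ones_like(az)], axis=-1)          # (H, W, 3)

def apply_lut(lut: np.ndarray, range_mm: np.ndarray) -> np.ndarray:
    """Scale each unit vector by its range, converted from mm to m: (H, W, 3)."""
    return lut * (range_mm[..., None] / 1000.0)

lut = make_lut([0.0, 30.0], n_columns=4)  # toy sizes: 2 beams x 4 columns
range_mm = np.full((2, 4), 2000.0)        # every pixel 2 m away
xyz = apply_lut(lut, range_mm)
print(xyz.shape)  # (2, 4, 3)
```

The trigonometry runs once per sensor configuration; each new scan then costs only one element-wise multiplication, which is the point of using a lookup table.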

Now we rearrange the resulting numpy array into a shape that’s suitable for plotting:

import numpy as np

[x, y, z] = [c.flatten() for c in np.dsplit(xyz, 3)]

ax = plt.axes(projection='3d')

r = 10

ax.set_xlim3d([-r, r])

ax.set_ylim3d([-r, r])

ax.set_zlim3d([-r/2, r/2])

plt.axis('off')

z_col = np.minimum(np.absolute(z), 5)

ax.scatter(x, y, z, c=z_col, s=0.2)

plt.show()
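The np.dsplit step takes the x, y and z channels and flattens each one. The sketch below uses random stand-in data in place of a real scan. It checks that this matches the common reshape(-1, 3).T idiom. It also reproduces the color value used above: |z| capped at 5, so every point more than 5 m above or below the sensor gets the same color.

```python
import numpy as np

rng = np.random.default_rng(1)
xyz = rng.normal(scale=8.0, size=(4, 6, 3))  # stand-in for xyzlut(scan), shape (H, W, 3)

# Tutorial approach: split the last axis into three (H, W, 1) arrays, then flatten each.
x, y, z = [c.flatten() for c in np.dsplit(xyz, 3)]

# Equivalent idiom: flatten to (N, 3) and unpack the transposed columns.
x2, y2, z2 = xyz.reshape(-1, 3).T

# Color value: absolute height, capped at 5 so distant outliers share one color.
z_col = np.minimum(np.absolute(z), 5)

print(x.shape, np.allclose(x, x2) and np.allclose(z, z2))  # (24,) True
```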

Point cloud from OS2 bridge data with colormap on z. Data taken at Brooklyn Bridge, NYC.

You should be able to rotate the resulting scene to view it from different angles.

To learn more about manipulating lidar data, see: